In UVM-based verification environments, uvm_packer is a powerful utility for converting class objects to bit streams and vice versa. It’s commonly used for serialization and deserialization of packets. However, if you’re working with large packets or complex data structures, you might encounter a frustrating issue: data beyond 4096 bytes becomes zeroed out during packing and unpacking operations. This is the UVM packer size limitation.

The Root Cause

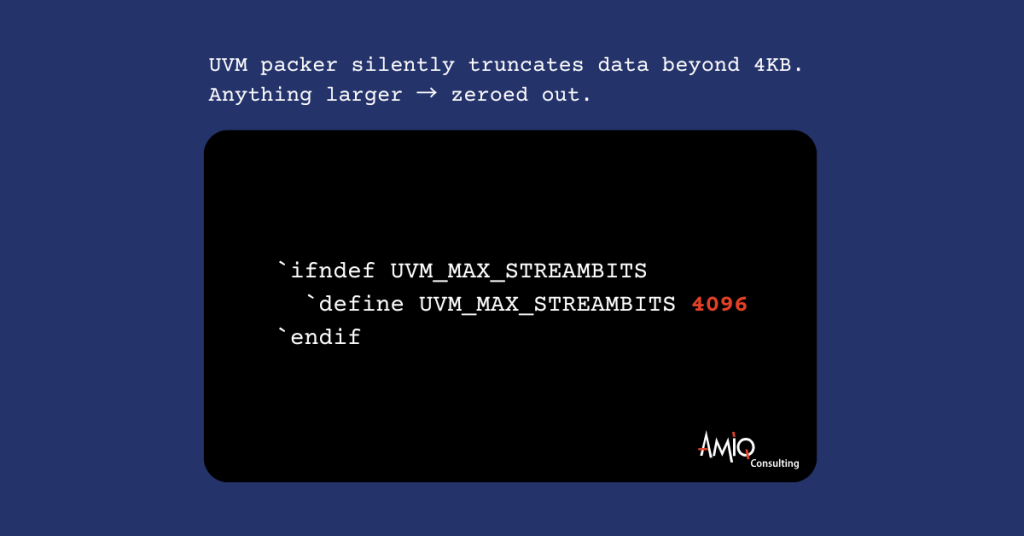

This limitation comes from the default values of two macros defined in uvm_global_defines.svh, which is included by uvm_macros.svh:

```systemverilog
// MACRO: `UVM_MAX_STREAMBITS
//
// Defines the maximum bit vector size for integral types.
// Used to set uvm_bitstream_t

`ifndef UVM_MAX_STREAMBITS
 `define UVM_MAX_STREAMBITS 4096
`endif

// MACRO: `UVM_PACKER_MAX_BYTES
//
// Defines the maximum bytes to allocate for packing an object using
// the <uvm_packer>. Default is <`UVM_MAX_STREAMBITS>, in ~bytes~.

`ifndef UVM_PACKER_MAX_BYTES
 `define UVM_PACKER_MAX_BYTES `UVM_MAX_STREAMBITS
`endif
```

Note the confusing aspect here: despite its name, UVM_PACKER_MAX_BYTES defaults to UVM_MAX_STREAMBITS, a macro that otherwise counts bits (it sets the width of uvm_bitstream_t). The packer, however, treats the value as a number of bytes: in UVM 1.1 and 1.2, its internal storage is a bit vector that is UVM_PACKER_MAX_BYTES*8 bits wide. The packer therefore has a 4KB (4096-byte) limit, and when you pack or unpack an object larger than this, the data beyond the limit is silently truncated to zeros.
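Why zeros, and not an error? Assuming, as in the UVM 1.x packer, that packed bits live in a fixed-size 2-state bit vector, SystemVerilog's out-of-range rules do the rest: writes past the end of the vector are ignored, and reads past the end return 0. The module below is a simplified model of that behavior, not UVM source code; MAX_BYTES is a hypothetical stand-in for `UVM_PACKER_MAX_BYTES, shrunk to 4 so the effect is easy to see.

```systemverilog
// Simplified model of the packer's fixed-size buffer (not UVM code).
module packer_truncation_model;
  localparam int MAX_BYTES = 4;   // hypothetical stand-in for `UVM_PACKER_MAX_BYTES
  bit [MAX_BYTES*8-1:0] store;    // 2-state fixed-size buffer, like the packer's bit vector
  byte unsigned readback[6];      // what a caller gets back when asking for 6 bytes

  initial begin
    // "Pack" 6 bytes (values 1..6) into a 4-byte buffer: the part-selects
    // for bytes 4 and 5 are entirely out of range, so those writes are ignored
    for (int i = 0; i < 6; i++)
      store[i*8 +: 8] = 8'(i + 1);

    // "Unpack" 6 bytes: out-of-range reads of a 2-state vector return 0
    for (int i = 0; i < 6; i++) begin
      readback[i] = store[i*8 +: 8];
      $display("byte[%0d] = %0d", i, readback[i]);
    end
  end
endmodule
```

Simulating it should print the values 1 to 4 for bytes 0 to 3 and 0 for bytes 4 and 5, with no error raised (some simulators may add an out-of-range warning).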

A Practical Example

Let’s demonstrate this issue with a simple example:

```systemverilog
import uvm_pkg::*;
`include "uvm_macros.svh"

class amiq_base_item extends uvm_sequence_item;
  `uvm_object_utils(amiq_base_item)

  rand bit        field_1;
  rand bit [16:0] field_2;
  rand bit [7:0]  field_3[];

  function new(string name = "amiq_base_item");
    super.new(name);
  endfunction

  virtual function void do_pack(uvm_packer packer);
    super.do_pack(packer);
    `uvm_pack_intN(field_1, 8)   // 1 bit, padded to a full byte
    `uvm_pack_intN(field_2, 24)  // 17 bits, padded to 3 bytes so no bit is lost
    foreach (field_3[i])
      `uvm_pack_intN(field_3[i], 8)
  endfunction
endclass

module top;
  amiq_base_item item = new;
  byte unsigned  packed_bytes[];

  initial begin
    // Populate the item with an array larger than 4096 bytes
    item.field_1 = 1;
    item.field_2 = 17'h1FFFF;
    item.field_3 = new[8000];

    // Fill with repeating non-zero values (1-255) so zeroed bytes stand out
    foreach (item.field_3[i])
      item.field_3[i] = (i % 255) + 1;

    // Pack the item into a byte array (pack_bytes() calls do_pack() internally)
    void'(item.pack_bytes(packed_bytes));

    // Print values around the 4KB boundary; the 4-byte header
    // (field_1 + field_2) shifts field_3 by 4 positions in the stream
    for (int i = 4094; i < 4100 && i < packed_bytes.size(); i++) begin
      $display("Packed byte[%0d] = %0h, Expected value = %0h",
               i, packed_bytes[i], item.field_3[i-4]);
    end
  end
endmodule
```

When you run this example, the packed bytes up to index 4095 match the item's fields, but every byte from index 4096 onward is zero, even though field_3 contains only non-zero values.
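Since the truncation is silent, a cheap safeguard is to compare the packed size against the limit after every pack. The sketch below assumes a UVM 1.1/1.2 library where `UVM_PACKER_MAX_BYTES is visible through uvm_macros.svh, as shown above; amiq_checked_pack_bytes is a hypothetical helper, not part of UVM. It relies on pack_bytes() returning the packed size in bits.

```systemverilog
import uvm_pkg::*;
`include "uvm_macros.svh"

// Hypothetical helper (not part of UVM): packs obj with pack_bytes() and
// reports an error instead of silently returning a stream whose tail is zeroed.
// Assumes a UVM 1.1/1.2 library where `UVM_PACKER_MAX_BYTES sizes the packer buffer.
function automatic int amiq_checked_pack_bytes(uvm_object obj,
                                               ref byte unsigned stream[]);
  int n_bits = obj.pack_bytes(stream);  // packed size, in bits
  if (n_bits > `UVM_PACKER_MAX_BYTES * 8)
    `uvm_error("AMIQ_PACK_LIMIT",
               $sformatf("%s packed to %0d bytes; only the first %0d bytes are valid",
                         obj.get_name(), (n_bits + 7) / 8, `UVM_PACKER_MAX_BYTES))
  return n_bits;
endfunction
```

Calling void'(amiq_checked_pack_bytes(item, packed_bytes)); in place of the packing call in the example would turn the 8000-element case into a reported UVM error rather than a stream with a zeroed tail.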

The Possible Solutions for UVM Packer Size Limitation

There are two main approaches to solving this issue:

1. Redefine the UVM_PACKER_MAX_BYTES macro

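This issue occurs because the UVM packer has a default 4096-byte limit for packed data. For objects that pack to more than this, UVM_PACKER_MAX_BYTES must be set to a larger value.

By default, UVM_PACKER_MAX_BYTES takes the value of UVM_MAX_STREAMBITS (4096, interpreted as bytes). To change it, redefine either UVM_PACKER_MAX_BYTES or UVM_MAX_STREAMBITS to the size you need. Keep in mind that UVM_MAX_STREAMBITS also sets the width of uvm_bitstream_t (in bits), so redefining it affects more than the packer. Because both macros are guarded by `ifndef, the new value only takes effect if it is defined when the UVM package itself is compiled; a precompiled UVM library keeps the default.

To redefine either macro, add one of the following compile options: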

```
// Redefine UVM_PACKER_MAX_BYTES (value in bytes)
+define+UVM_PACKER_MAX_BYTES=<SIZE>

// or redefine UVM_MAX_STREAMBITS
+define+UVM_MAX_STREAMBITS=<SIZE>
```

While simple to implement, this solution has one main drawback: it increases memory consumption for all UVM packer operations globally, because every packer buffer grows to the new size, which can impact simulation performance across your entire testbench. If the redefined size gives you enough capacity for your verification needs, this is the simplest approach; however, if you observe significant performance degradation or are dealing with extremely large data structures, you should consider a custom packing mechanism similar to the one described in option 2.
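As a worked sizing example, assuming field_3 in the example item never holds more than 8000 elements: the packed stream is one byte per field_3 element plus a few header bytes for field_1 and field_2, i.e. a little over 8000 bytes. Rounding up to 8192 leaves some headroom; the exact value is a choice, and any value at least as large as the biggest packed object would do.

```
// Hypothetical sizing: at most 8000 field_3 bytes + a few header bytes < 8192
+define+UVM_PACKER_MAX_BYTES=8192
```

With this value, each packer buffer in UVM 1.1/1.2 grows from 4096*8 = 32768 bits to 8192*8 = 65536 bits, which is the memory cost described above.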

2. Implement a custom packing mechanism

Creating custom packing and unpacking methods provides a more robust solution for large data structures. This approach offers finer control over memory usage and supports arbitrarily large data structures without modifying UVM’s infrastructure.

The custom approach requires more initial development effort and careful implementation to ensure data integrity. In return, it offers better long-term maintainability and lets you optimize specifically for the data types that need to exceed 4KB.

Below is an example of a packing method, to be added to amiq_base_item, that can be used instead of the standard UVM packing flow (pack_bytes() calling do_pack()):

```systemverilog
// Declared inside amiq_base_item. A named type is needed because a
// function cannot return "byte unsigned[]" directly.
typedef byte unsigned amiq_byte_array_t[];

function amiq_byte_array_t amiq_do_pack();
  int size = 1 + 3 + field_3.size(); // 1 byte for field_1, 3 bytes for field_2, N bytes for field_3
  byte unsigned packed_data[];

  // Allocate the array
  packed_data = new[size];

  // Pack field_1 (1 bit, but using a full byte)
  packed_data[0] = field_1;

  // Pack field_2 (17 bits, using 3 bytes, most significant byte first)
  packed_data[1] = (field_2 >> 16) & 8'hFF;
  packed_data[2] = (field_2 >> 8) & 8'hFF;
  packed_data[3] = field_2 & 8'hFF;

  // Pack field_3 (byte array)
  for (int i = 0; i < field_3.size(); i++) begin
    packed_data[i + 4] = field_3[i];
  end

  return packed_data;
endfunction
```

To implement this solution, replace the standard packing call

```systemverilog
void'(item.pack_bytes(packed_bytes));
```

with the custom method:

```systemverilog
packed_bytes = item.amiq_do_pack();
```

This substitution uses the custom packing logic above to generate the byte array, bypassing UVM’s 4KB limitation.
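Option 2 calls for custom unpacking as well, but only the packing side is shown. As a sketch of the inverse, the code below reads the same layout back ([field_1][field_2 as 3 bytes, MSB first][field_3...]) and checks a full round trip past the 4KB boundary. The class amiq_raw_item, its amiq_do_unpack method and the amiq_byte_array_t typedef are hypothetical: the class simply mirrors amiq_base_item's fields without UVM so it can run on its own; in a real environment both methods would live in amiq_base_item.

```systemverilog
typedef byte unsigned amiq_byte_array_t[];

// Hypothetical stand-alone class with the same fields as amiq_base_item (no UVM).
class amiq_raw_item;
  bit        field_1;
  bit [16:0] field_2;
  bit [7:0]  field_3[];

  // Same byte layout as amiq_do_pack(): [field_1][field_2: 3 bytes, MSB first][field_3...]
  function amiq_byte_array_t amiq_do_pack();
    amiq_byte_array_t data = new[4 + field_3.size()];
    data[0] = field_1;
    data[1] = (field_2 >> 16) & 8'hFF;
    data[2] = (field_2 >> 8) & 8'hFF;
    data[3] = field_2 & 8'hFF;
    foreach (field_3[i]) data[i + 4] = field_3[i];
    return data;
  endfunction

  // Hypothetical inverse of amiq_do_pack(); returns 0 if the stream cannot hold the header
  function bit amiq_do_unpack(amiq_byte_array_t data);
    if (data.size() < 4) return 0;
    field_1 = data[0][0];
    field_2 = {data[1][0], data[2], data[3]};  // 1 + 8 + 8 = 17 bits
    field_3 = new[data.size() - 4];
    foreach (field_3[i]) field_3[i] = data[i + 4];
    return 1;
  endfunction
endclass

module amiq_round_trip;
  amiq_raw_item     src, dst;
  amiq_byte_array_t stream;

  initial begin
    src = new();
    dst = new();
    src.field_1 = 1;
    src.field_2 = 17'h1FFFF;
    src.field_3 = new[8000];  // well above 4096 bytes
    foreach (src.field_3[i]) src.field_3[i] = (i % 255) + 1;

    stream = src.amiq_do_pack();
    if (!dst.amiq_do_unpack(stream))
      $fatal(1, "stream shorter than the 4-byte header");

    // Compare every field, including the bytes past the 4KB boundary
    if (dst.field_1 == src.field_1 && dst.field_2 == src.field_2 &&
        dst.field_3 == src.field_3)
      $display("Round trip OK: %0d bytes", stream.size());
    else
      $display("Round trip MISMATCH");
  end
endmodule
```

Checking the whole round trip, rather than only the packed bytes, also catches layout mismatches between the pack and unpack sides, which is where hand-written serializers tend to go wrong.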

This custom packing approach also has drawbacks. It departs from the standard UVM packing API, which can create integration challenges when components are reused across different verification environments. Moreover, this implementation only serializes to a byte array, whereas the standard UVM packer provides more versatile options such as pack_bits(), pack_ints(), etc. to accommodate different serialization needs.

Conclusion

The 4096-byte limit in UVM’s packer is a common source of hard-to-debug issues in environments dealing with large data structures. By understanding this limitation and implementing one of the solutions above, you can avoid corrupted data when working with packets larger than 4KB.

Remember that silently corrupting data beyond the 4KB limit can lead to false passes in your verification environment, which is particularly dangerous as it might mask real issues in your design.